Science

Peaks and Valleys of a Lost World: Mapping the Continent Beneath the Ice

28 January 2026

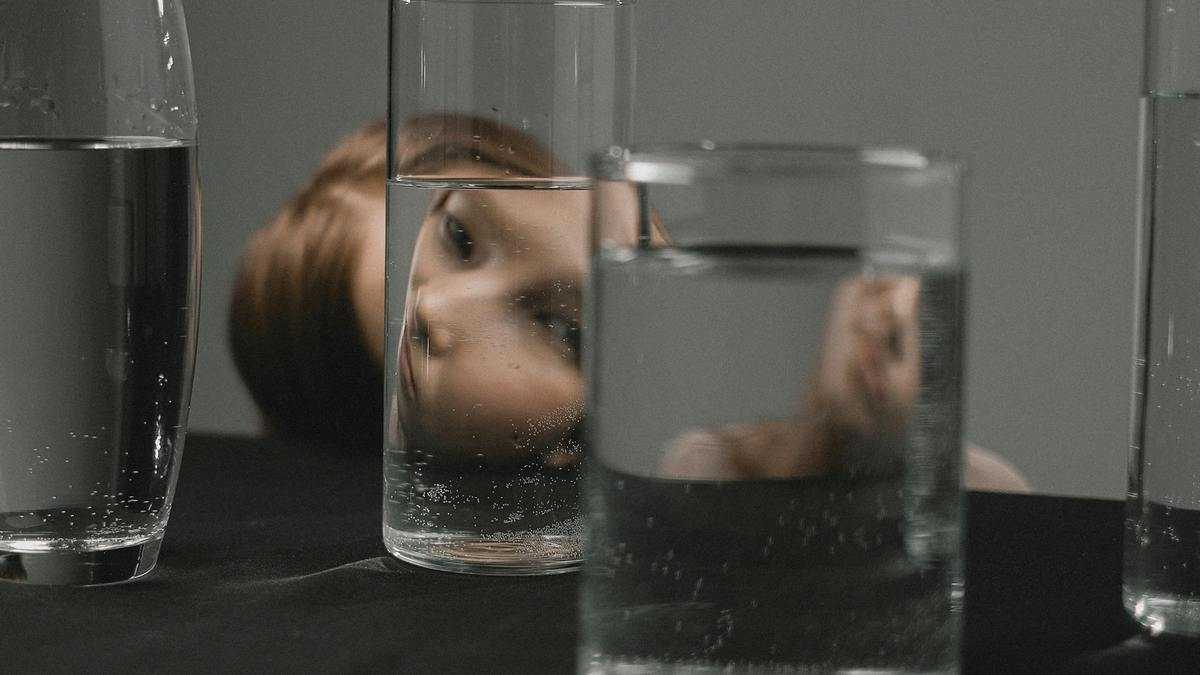

We all like to believe we are rational, logical, and objective. But what if our minds are constantly tricking us, unconsciously shaping our reality to fit our desires and beliefs? It's a humbling thought. Cognitive biases are the mental shortcuts we all use to make sense of the world, and they lead us to predictable, often flawed, conclusions. While it's impossible to avoid them completely, recognizing the most common ones is the first step toward making more conscious decisions.

As Polish poet Jonasz Kofta wrote, “We are not who we see in the mirror.” This truth is confirmed not just by poetry, but by science. We deceive ourselves daily, believing we are rational and logical, unaware that we bend reality to fit our desires. But would we change our behavior if we truly understood that cognitive biases drive us?

If we are not who we think we are, are we exactly who others see us as? This perspective is also an oversimplification, as this unconscious distortion of reality affects not only us but also those who judge us.

Everyone, regardless of education or experience, falls prey to cognitive blunders. With a little willingness, you can recognize many of these biases. Here are four common cognitive biases that you’ve likely encountered.

The first is the bias blind spot. The American psychologist Emily Pronin borrowed the medical term “blind spot” to name this cognitive bias. In medicine, the blind spot is the point on the retina where photoreceptor cells are absent, so that area is insensitive to light.

In psychology, the bias blind spot (BBS) means that our awareness doesn’t shine a light on our own prejudices. The concept was introduced by Emily Pronin and her colleagues in a 2002 study titled “The Bias Blind Spot: Perceptions of Bias in Self Versus Others.” A study of 600 U.S. residents illustrates it well: over 85% believed they were less biased than the average American, and only one person thought the opposite. People differ in how “blind” they are to their own biases, but almost everyone associates prejudice with something negative. We prefer to think of our own views, judgments, and perceptions as rational, logical, and free from bias, or even original.

This mindset lets us feel that our decisions are sound, or even superior to those of others. In reality, many of our choices carry a bias or rest on a mental shortcut, even if we are unaware of it. A 2003 study of physicians examined this illusion of objective decision-making. The doctors saw no connection between the drugs they prescribed and the gifts they received from pharmaceutical companies, believing their decisions were based purely on science. At the same time, they perceived their colleagues’ prescriptions as strongly influenced by the pharmaceutical industry.

Can we escape this trap of false self-assessment? Psychologists admit it’s not easy. To overcome your own prejudices, you first have to recognize and understand them. However, the BBS doesn’t give up so easily. Spotting your own biases is an exceptionally difficult task because when we search for the truth about ourselves, we are still guided by the very biases we are trying to understand.

The “bias blind spot” shapes how we judge our decisions, but whether those decisions are actually accurate is another matter. When making a choice, we are often guided by past choices that we remember as better than they actually were. The mechanism is simple: we consider our own decisions “better” because we know much more about them than about the decisions of others. At least, that’s what our supposedly objective thoughts tell us. This is known as the choice-supportive bias.


The difficulty of judging what information others have is also evident in another cognitive bias, the curse of knowledge. A saying often attributed to Albert Einstein holds that if you can’t explain a complex process in simple terms, you don’t fully understand it yourself. It is a compelling idea, but an oversimplification that doesn’t always reflect reality. The curse of knowledge shows up in the reasoning of many outstanding experts, who find it hard to grasp that others may not share their level of knowledge on a given topic.

For instance, the famous Polish poet and priest Jan Twardowski described a theology professor who substituted for a preschool religion teacher. After the class, the academic reminded the children: “Remember, God is transcendental.” Similarly, an Olympic gold medalist in volleyball once said that as a coach, he found it hard to understand why a player couldn’t serve correctly.

This bias doesn’t just affect masters of their fields. One of the main obstacles between a novice journalist and the reader is the journalist’s unconscious assumption that the audience knows as much as they do. As a result, a first draft often contains many shortcuts and omissions that make the narrative unclear and disjointed.

The need for certainty plays a central role when we make decisions and assess risk, and here it is easy to fall into another cognitive bias: the pseudocertainty effect, which is closely related to the framing effect.

In their famous 1981 paper, “The Framing of Decisions and the Psychology of Choice,” psychologists Amos Tversky and Daniel Kahneman described an experiment in which over 150 people had to make a risky decision. In the scenario, an epidemic threatened the lives of 600 people. Treatment “A” would save 200 of them. Treatment “B” offered a one-third chance of saving all 600 people and a two-thirds chance of saving no one. Participants had to decide which treatment to use.

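The expected number of lives saved was the same for both treatments: 200. For “B,” this comes from one-third of 600 plus two-thirds of zero. However, over 70% of respondents chose option “A,” preferring the solution that seemed more certain and predictable. Fewer than 30% chose treatment “B.”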
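The equality of the two expected values can be checked directly. The sketch below represents each treatment as a list of (probability, lives saved) pairs. This representation is chosen here for illustration and does not come from the study. Exact fractions avoid rounding errors with the one-third probabilities.

```python
from fractions import Fraction

# Illustrative model (not from the study): each treatment is a list of
# (probability, lives_saved) pairs whose probabilities sum to 1.
TREATMENT_A = [(Fraction(1), 200)]                          # 200 saved for certain
TREATMENT_B = [(Fraction(1, 3), 600), (Fraction(2, 3), 0)]  # all saved or none

def expected_saved(outcomes):
    """Return the probability-weighted average number of lives saved."""
    if sum(p for p, _ in outcomes) != 1:
        raise ValueError("probabilities must sum to 1")
    return sum(p * saved for p, saved in outcomes)

print(expected_saved(TREATMENT_A))  # 200
print(expected_saved(TREATMENT_B))  # 200
```

Both options average out to 200 lives saved. The strong preference for “A” therefore cannot be explained by expected value alone.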

Was this a sign of fear of risk? Not entirely. When the wording of the task changed slightly, a second group of about 150 people chose completely differently. In the new version, treatment “A” would result in the deaths of 400 people. Treatment “B” offered a one-third chance that no one would die and a two-thirds chance that all 600 would die.

In this scenario, nearly 80% of participants recommended treatment “B.” The vast majority decided it was better to risk the lives of all 600 people than to accept the certain death of 400. This shows that the parameters don’t need to change at all. It is enough to highlight or obscure the risk, here by describing the same outcomes as lives saved or as lives lost, to steer someone toward a decision they perceive as objective and autonomous.
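The second wording describes exactly the same outcomes as the first, counted as deaths instead of survivors. The minimal sketch below reuses a hypothetical (probability, count) representation. It converts each loss-framed option into lives saved (600 minus deaths) and checks that the result matches the corresponding gain-framed option.

```python
from fractions import Fraction

POPULATION = 600

# Gain frame (first wording): (probability, lives_saved) pairs.
GAIN_A = [(Fraction(1), 200)]
GAIN_B = [(Fraction(1, 3), 600), (Fraction(2, 3), 0)]

# Loss frame (second wording): (probability, deaths) pairs.
LOSS_A = [(Fraction(1), 400)]
LOSS_B = [(Fraction(1, 3), 0), (Fraction(2, 3), 600)]

def deaths_to_saved(outcomes, population=POPULATION):
    """Re-express a loss-framed option in terms of lives saved."""
    return [(p, population - deaths) for p, deaths in outcomes]

def as_distribution(outcomes):
    """Collapse (probability, value) pairs into a value -> probability dict."""
    dist = {}
    for p, value in outcomes:
        dist[value] = dist.get(value, 0) + p
    return dist

def same_distribution(x, y):
    """True if two options assign the same probability to every outcome."""
    return as_distribution(x) == as_distribution(y)

print(same_distribution(deaths_to_saved(LOSS_A), GAIN_A))  # True
print(same_distribution(deaths_to_saved(LOSS_B), GAIN_B))  # True
```

The options are identical as probability distributions. Only the description changed between the two versions, yet the majority preference flipped.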

Can we learn to avoid cognitive biases? According to Professor Tomasz Maruszewski of SWPS University, “knowledge of cognitive biases generally doesn’t prevent us from making them.” The problem, the psychologist points out, is that if we don’t know the consequences of our mistakes, it’s hard to tell whether they stem from faulty premises. We learn from our mistakes, and there is no other way.

Read the original article: Nieświadomie wciąż się oszukujemy. 4 pospolite błędy poznawcze (We Keep Deceiving Ourselves Without Realizing It: 4 Common Cognitive Biases)