Science


23 February 2026

In March 2023, an open letter appeared unexpectedly on the website of the Future of Life Institute and quickly caught the public’s attention. Its signatories include prominent figures from science and technology, such as Elon Musk, Steve Wozniak, and Yuval Harari. In the interest of human well-being, the letter urges an immediate pause in the development of AI models more capable than GPT-4.

This appeal may come as a surprise, given the widespread admiration for ChatGPT, the leading AI chatbot. Although it was released to the public only in November 2022, it can already pass university exams, compose poetry, write code, and give detailed answers to even the most abstract questions. Does this warning from the scientific community have merit, and is it really necessary to halt AI development?

“We call on all AI labs to immediately pause for at least 6 months the training of AI systems more powerful than GPT-4,” states the letter from the Future of Life Institute. It also raises a series of open questions about how we envision coexisting with artificial intelligence.

Admittedly, these questions provoke mixed reactions, chiefly because they are not the stuff of dystopian science fiction but concern a chatbot available to anyone.

The rapid advancement of artificial intelligence carries many dangers. In a matter of months, a tool with such broad potential has shown that it could pose a structural threat to several crucial areas of our lives, starting with employment.

Copywriting, for instance, the craft of producing content for the vast expanse of the internet (including marketing, sales, and blogs), is now considered a high-risk profession. In the hands of a skilled user, ChatGPT can do the work of many copywriters. Almost overnight, an entire profession has been forced to update its skills and adapt; those who fail to do so risk being outpaced by machines.

An even greater challenge has emerged in education. Reports are increasingly common that artificial intelligence has passed entrance examinations at various universities and can write academic texts of acceptable quality. This creates numerous problems for educators, who will have to scrutinize submitted work more closely, using tools designed to detect whether a text was written by a human or generated by AI. Students’ easy access to AI has sparked protests from educators, and several American school districts have banned its use on school devices.

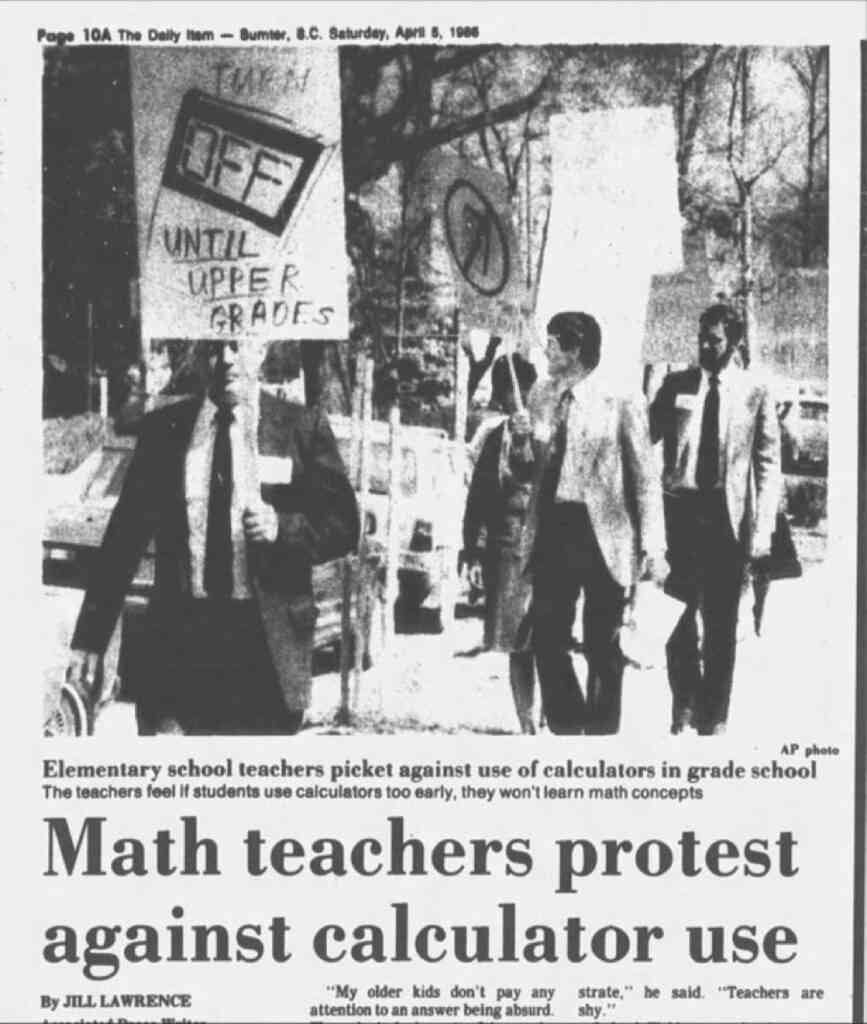

This mirrors a situation from several decades ago in the United States, when teachers opposed the use of calculators. Millennials and older generations may remember math teachers warning them to learn arithmetic because they would not always have a calculator on hand. Today, when nearly everyone carries a smartphone, that warning draws nothing but smiles.

The current applications of AI are merely the tip of the iceberg. How many more are there? The problem is that we have no idea! This uncertainty underpins the scientists’ plea for caution.

The call for action appears fully warranted, as Dr. Dorota Szymborska, a philosopher and AI specialist, emphasized on her LinkedIn profile:

“From an ethical standpoint, it may be necessary to temporarily halt the development of AI systems more advanced than GPT-4. This would afford scientists, engineers, policymakers, and the broader society the opportunity to reassess the trajectory of these technologies. Such a pause would facilitate a thorough examination of potential repercussions, the danger of relinquishing control over AI systems, and the implications for democracy and the future of work.”

So what exactly is the scientific community asking for? The authors of the letter elaborate:

“AI developers must work with policymakers to dramatically accelerate development of robust AI governance systems. These should at a minimum include: new and capable regulatory authorities dedicated to AI; oversight and tracking of highly capable AI systems and large pools of computational capability; provenance and watermarking systems to help distinguish real from synthetic and to track model leaks; a robust auditing and certification ecosystem; liability for AI-caused harm; robust public funding for technical AI safety research; and well-resourced institutions for coping with the dramatic economic and political disruptions (especially to democracy) that AI will cause.”

It might seem there is no room for argument: such advanced technology is simply too dangerous. Unfortunately, logic and common sense face a powerful foe in greed.

Is it pure coincidence that the open letter appeared online almost at the same moment ChatGPT was connected to the internet? Until recently, the chatbot could draw on an unfathomable volume of data to answer a question, but it could not book a flight, find the best-rated restaurants in an area, or order a taxi. It is only now acquiring these abilities. Simply put, ChatGPT can now use other applications, through plugins, to carry out tasks on command.

Will common sense and rationality prevail, or will a frenzied race for dominance break out over a technology that will fundamentally alter our lives? Like it or not, we will find out in the not-so-distant future.
